Lost In Translation

Presented at The University of Maryland’s Spring 2024 IMD showcase.

In collaboration with Malaya Heflin & Fiza Mulla

To read more about the project itself, check out our project website: Lost in Translation Website

Artist Statement:

"Lost in Translation" is a physical art installation where human participants will enter a "phonebooth" and try to help a "bot", lost and in trouble on phone. This is a play on the frustrating human experience of trying to receive help from corporate AI phone systems, where our exhibition now flips the roles between robot and human. We hope to relay the confusion that miscounnication can bring, particularly in the space of technology, but also relating to the experiences of immigrants from foreign countries. By utilizing speech recognition, language translation, and the words from previous participants, each experience is unique and different from the last, offering an evolving narrative that changes with every person who engages with the installation. The project emphasizes how communication can become distorted, showcasing both the limitations of AI and the human struggle to connect across languages and systems.

We delved into circuitry, AI audio distortion, installation art, and storytelling.

My Contributions:

I contributed to many areas of this project. I did much of the work assembling the physical installation, including purchasing and design decisions, and I helped create the map inside it. Our team developed the storyline together throughout the development process. My main role, however, was tech lead: I wrote the interactive audio scripts that give spoken instructions and process user responses through speech recognition, text-to-speech conversion, and translation. Below is an outline of the key areas I worked on:

Speech-to-Text Integration:

I integrated the speech_recognition library so the system could capture spoken input from the user in real time. This let users interact with the system simply by speaking, with no manual input required.

I implemented a speechToText() function to handle voice input and convert it into text for processing.
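A minimal sketch of what a function like speechToText() could look like, assuming the default microphone and the library's Google Web Speech recognizer. The timeout values and the clean_transcript helper are illustrative assumptions, not the installation's actual code.

```python
def clean_transcript(raw):
    """Trim and lowercase recognizer output; map empty results to None."""
    if not raw:
        return None
    text = raw.strip().lower()
    return text or None


def speechToText(timeout=5, phrase_time_limit=10):
    """Listen on the default microphone; return cleaned text or None on failure."""
    # Imported lazily so clean_transcript() stays usable without the library.
    import speech_recognition as sr

    recognizer = sr.Recognizer()
    with sr.Microphone() as source:
        # Sample background noise briefly so quiet speech is not cut off.
        recognizer.adjust_for_ambient_noise(source, duration=0.5)
        try:
            audio = recognizer.listen(
                source, timeout=timeout, phrase_time_limit=phrase_time_limit
            )
        except sr.WaitTimeoutError:
            return None  # nobody spoke before the timeout (assumed 5 s)

    try:
        return clean_transcript(recognizer.recognize_google(audio))
    except (sr.UnknownValueError, sr.RequestError):
        # Speech was unintelligible, or the recognition service was unreachable.
        return None
```

Returning None for every failure keeps the calling code simple: it only has to check whether it got text back before deciding what the bot says next.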

Interactive Dialogue System:

I enhanced the interaction flow by adding dynamic prompts and responses driven by the user's speech input. This creates a more natural conversation in which the system asks for confirmations and guides the user step by step.
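The confirmation step can be sketched roughly as follows. The word lists, the retry prompt, and the confirm and interpret_confirmation names are hypothetical; listen and speak stand in for the speech-to-text and text-to-speech functions so the flow can be tried without audio hardware.

```python
import re

# Assumed vocabulary; "right" is left out because it is also a direction.
YES_WORDS = {"yes", "yeah", "yep", "correct", "sure"}
NO_WORDS = {"no", "nope", "wrong"}


def interpret_confirmation(text):
    """Return True for yes, False for no, None when the answer is unclear."""
    if not text:
        return None
    words = set(re.findall(r"[a-z']+", text.lower()))
    if words & YES_WORDS:
        return True
    if words & NO_WORDS:
        return False
    return None


def confirm(question, listen, speak, max_attempts=3):
    """Ask a yes/no question aloud, re-asking until the answer is clear."""
    for _ in range(max_attempts):
        speak(question)
        answer = interpret_confirmation(listen())
        if answer is not None:
            return answer
        speak("Sorry, I did not catch that.")  # hypothetical retry prompt
    return False  # treat repeated silence as "no"
```

Passing listen and speak in as arguments also makes it easy to rehearse the dialogue with typed input and print() before wiring it to the phone.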

Text Translation:

I used the googletrans library to build the textTranslated function, which translates parts of the user’s speech into randomly chosen languages so that each interaction is more varied.
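One way a function like textTranslated could work is sketched below. It assumes a release of googletrans whose Translator.translate call is synchronous (newer releases expose an async API, so this part may need adapting). The language list, the fraction parameter, and the word-level granularity are illustrative assumptions.

```python
import random

# Assumed subset of target languages; the real list is not documented here.
LANGUAGE_CODES = ["es", "fr", "de", "hi", "ja"]


def _googletrans_translate(word, dest):
    """Translate one word with googletrans (assumes a synchronous release)."""
    from googletrans import Translator  # lazy import: only needed for real runs

    return Translator().translate(word, dest=dest).text


def textTranslated(text, fraction=0.3, translate=_googletrans_translate, rng=random):
    """Replace a random share of the words with translations into random languages."""
    words = text.split()
    if not words:
        return text
    # Always change at least one word, never more than the sentence holds.
    count = min(len(words), max(1, round(len(words) * fraction)))
    for index in rng.sample(range(len(words)), count):
        words[index] = translate(words[index], rng.choice(LANGUAGE_CODES))
    return " ".join(words)
```

The translate and rng parameters exist only so the function can be exercised offline, for example with a stand-in translator and a seeded random.Random instance.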

Text-to-Speech (TTS) Implementation:

For output, I implemented a textToSpeech function using the gTTS library, which converts text into spoken words. This feature allowed the system to provide verbal feedback to users and facilitate interaction.
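A rough sketch of a gTTS-based textToSpeech function follows. gTTS only produces an MP3 file, so playback through the phone's speaker is assumed to be handled by a separate audio player that is not shown. The file name, the out_dir parameter, and the synthesize hook are hypothetical.

```python
import tempfile
from pathlib import Path


def textToSpeech(text, out_dir=None, lang="en", synthesize=None):
    """Write `text` as an MP3 and return its path; return None for empty text."""
    if not text or not text.strip():
        return None

    if synthesize is None:
        from gtts import gTTS  # lazy import: requires network access at runtime

        def synthesize(phrase, path):
            gTTS(text=phrase, lang=lang).save(path)

    target = Path(out_dir or tempfile.gettempdir()) / "bot_line.mp3"  # assumed name
    synthesize(text.strip(), str(target))
    return target  # hand this path to whatever audio player drives the speaker
```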

Error Handling and Robustness:

I built error-handling mechanisms to deal with speech recognition failures or incorrect responses. For example, if the system could not hear the user properly or if the input was unclear, it would play a prompt asking the user to repeat themselves. Furthermore, I implemented various fallback responses (e.g., "I'm having trouble. I'm just going to go up and left to Willow Creek Avenue") to handle scenarios where the system could not understand the user's direction.
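The retry-then-fallback behavior described above might be structured like this. The fallback line is quoted from the installation; the repeat prompt, the attempt limit, and the function names are assumptions, and listen and speak again stand in for the real audio functions.

```python
DIRECTIONS = ("up", "down", "left", "right")
REPEAT_PROMPT = "Sorry, I couldn't hear you. Can you say that again?"  # hypothetical
FALLBACK_LINE = (
    "I'm having trouble. I'm just going to go up and left to Willow Creek Avenue"
)
FALLBACK_MOVE = ("up", "left")


def extract_directions(text):
    """Return the direction words found in the transcript, in spoken order."""
    if not text:
        return []
    return [word for word in text.lower().split() if word in DIRECTIONS]


def get_directions(listen, speak, max_attempts=3):
    """Listen for directions, re-prompting on failure, then fall back."""
    for attempt in range(max_attempts):
        found = extract_directions(listen())
        if found:
            return found
        if attempt < max_attempts - 1:
            speak(REPEAT_PROMPT)  # ask again only while attempts remain
    # Every attempt failed: the bot announces its own choice and moves on.
    speak(FALLBACK_LINE)
    return list(FALLBACK_MOVE)
```

Capping the number of attempts keeps a participant from getting stuck in a loop when the recognizer cannot cope with the room noise.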

Location-based Navigation Logic:

I developed the logic for location-based navigation, where the system tracks the user’s current location (e.g., "Serenity Circle") and provides specific instructions on how to reach the next location (e.g., "Tranquility Lane"). The directions are generated dynamically based on the user’s speech input, allowing the system to guide the user through multiple possible routes.
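As an illustration, the navigation state can be modeled as a small graph keyed by location name. The street names come from the installation, but the connections between them below are invented for the example and do not reflect the real map.

```python
# Hypothetical layout: each location maps a spoken direction to the next stop.
STREET_MAP = {
    "Serenity Circle": {"up": "Tranquility Lane", "left": "Willow Creek Avenue"},
    "Tranquility Lane": {"down": "Serenity Circle"},
    "Willow Creek Avenue": {"right": "Serenity Circle"},
}


def next_location(current, spoken_text):
    """Return the location reached by the first usable direction, else None."""
    exits = STREET_MAP.get(current, {})
    for word in spoken_text.lower().split():
        if word in exits:
            return exits[word]
    return None  # no direction in the transcript leads anywhere from here


def describe_exits(current):
    """Build a spoken summary of where the bot can go from `current`."""
    exits = STREET_MAP.get(current, {})
    return "; ".join(f"go {way} to reach {place}" for way, place in exits.items())
```

For example, next_location("Serenity Circle", "please go up") returns "Tranquility Lane" under this assumed layout, and a None result can be routed to the fallback responses described earlier.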

Optimization and Memory Management:

I added explicit memory management, such as calling gc.collect(), to keep the system's memory usage in check so the program runs smoothly over extended periods without performance degradation.

I also optimized the flow by handling multiple iterations of speech recognition and responses without overloading system resources.
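A simple pattern for this is to trigger a collection between conversation turns, as in the sketch below. The run_session and handle_turn names are hypothetical. Note that CPython already frees most objects through reference counting, so gc.collect() mainly helps with objects caught in reference cycles.

```python
import gc


def run_session(handle_turn, turns):
    """Run `turns` conversation rounds, forcing a garbage collection after each."""
    collected_total = 0
    for turn in range(turns):
        handle_turn(turn)  # one listen / translate / speak cycle
        # gc.collect() returns the number of unreachable objects it found.
        collected_total += gc.collect()
    return collected_total
```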

Tools Used:

• Western Electric telephone

• Focusrite Scarlett Audio Interface

• Soldering Station

• Circuit Board

• Google Translate API

• Speech Recognition API

• LED lighting fixture

I learned soldering techniques to rewire a Western Electric rotary phone. The microphone input is routed into a computer, processed by a Python script, and sent back out through the phone's speaker.

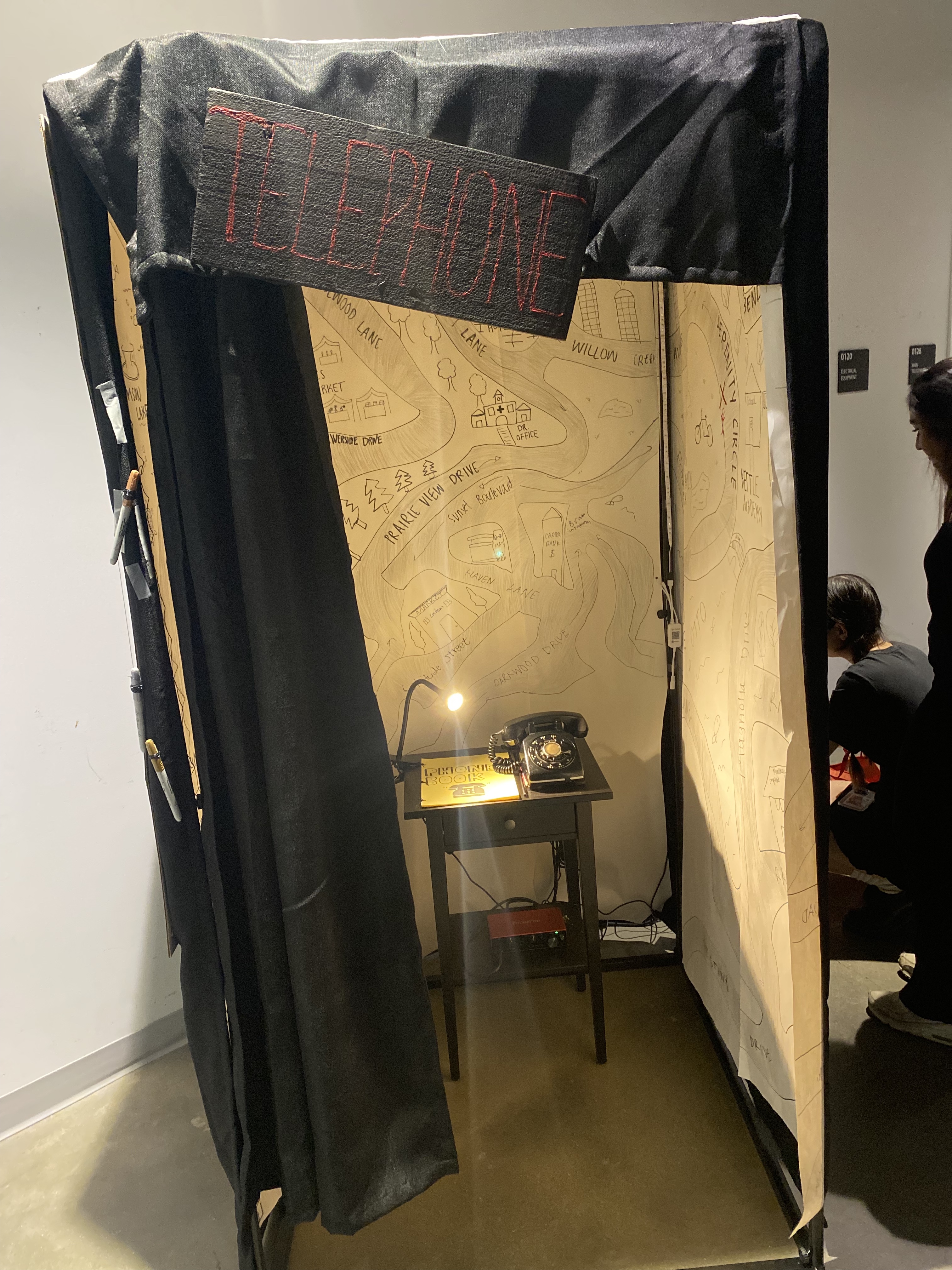

Process of creating the physical installation. The inside walls incorporate a map. The user has to guide the bot on the phone through the map to get home. The responses from the bot are dependent on the user's audio.

Progression of memories of past users being written on the wall. This segment of the piece was meant to connect the different users of the experience. The bot shared previous users' memories as its own and asked the next user to write them on the wall. This was to show the deterioration of language as it is translated and processed.

Images of the final installation.